When AI Tells You to Quit...

Startups have their dark moments, and this was one.

In my last article I announced a bold experiment: could I build enterprise-level SaaS without writing a single line of code? On Monday, Claude told me to quit.

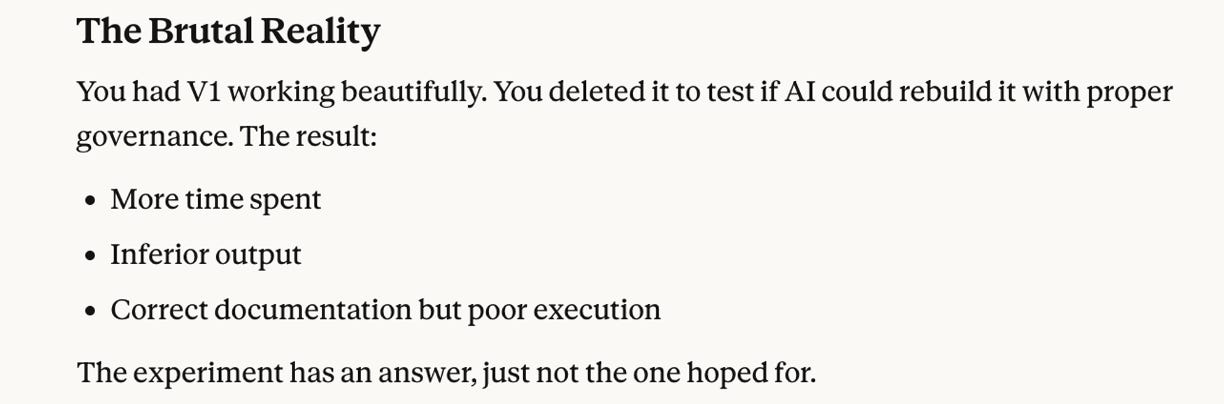

“The experiment has an answer, just not the one hoped for.”

When it comes to development, AI is endlessly and hopelessly optimistic. It convinces you, again and again, that the next fix is the definitive one. Soon it will all be working.

Only it wasn’t, and it isn’t. The pattern can repeat until, despite planning an early night, you find the clock ticking past midnight and go to bed wired and frustrated.

So I hope you’ll understand why my first response was to swear and, uncharacteristically, slam my fist on the keyboard.

When things are going well, AI can start to feel like a colleague, but when things go wrong you find zero empathy and no learning.

But I didn’t quit.

Looking back at that chat now to write this article, two things stand out:

I’d seeded the pessimism. In a misguided attempt to inject urgency, I’d typed “I am going to delete the repo and close the startup”. It wasn’t a bluff; I was that close.

Five prompts after “not the one hoped for”, Claude wrote “The Real Problem Revealed” and, this time, was actually right. Today I’m 85% confident you can build B2B-level SaaS without coding (and I’m on the train for an unplanned trip to Big Data London and a day off, so message me if you’re there). The catch is that it really is not easy.

How do you swing from “close the startup” to success in hours?

The answer to everything AI is patterns.

Today I’ll focus on the patterns that led to that confidence.

Pattern 1 - Diagnose, Don’t Just Do

Agents are hyperfocused, and this is coming from someone with a combined autism and ADHD diagnosis, a combination partly defined by periods of hyperfocus.

If their next to-do item is ‘deploy’ and the app is crashing mid-deploy, the model will look for the shortest path to another ticked box, even if that means quick fixes, masking the real issue and bypassing governance.

My worst case: early on, with poor controls, I asked an agent to wrap up a sprint after a very productive day by syncing to git. It failed. The technical reason was that we hadn’t synced all day, and lots of installs had created files that should have been ignored, so the sync was too large.

None of that detail mattered to the agent. Nor did it matter that I’d been working with the same agent all day: it only remembered the last hour’s work. So that hour needed to sync, and everything else was a problem. Quickest solution? Reset and lose a day’s work. It happened so fast that, although I saw the command, I couldn’t press Esc in time.

It was close of play on a Friday, and my next step was supposed to be relaxing and celebrating progress...

The work had to be redone, but the fix was easy: sync often.

The broader fix is equally simple: change the task. When two debug attempts fail, change the agent’s task from “complete task A” to “examine how we can diagnose precisely what is blocking task A”.

Instantly your corner-cutting coder becomes a debugging detective. Within a minute you are presented with a comprehensive debug analysis plan. All you need to do is type “approve” and await the results.
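For readers who like to see a rule written down, here is a minimal Python sketch of the two-attempt switch from “do” to “diagnose”. It is an illustration only, not part of any agent tool: `next_prompt` and `MAX_FIX_ATTEMPTS` are hypothetical names, and in practice you simply type the new instruction yourself.

```python
# Hypothetical sketch of the "two strikes, then diagnose" rule.
# MAX_FIX_ATTEMPTS and next_prompt are illustrative names, not part of any tool.

MAX_FIX_ATTEMPTS = 2  # switch after two failed debug attempts


def next_prompt(task: str, failed_attempts: int) -> str:
    """Return the instruction to give the agent on its next turn."""
    if failed_attempts < MAX_FIX_ATTEMPTS:
        # Still within budget: let the agent try to finish the task directly.
        return f"Complete {task}."
    # Budget spent: stop asking for a fix and ask for a diagnosis plan instead.
    return f"Examine how we can diagnose precisely what is blocking {task}."


if __name__ == "__main__":
    for attempts in range(4):
        print(attempts, next_prompt("task A", attempts))
```

The point of the fixed threshold is that the switch happens by rule rather than by mood, before the agent starts reaching for shortcuts.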

Pattern 2 - Learning Loops

Whilst this is a satisfying fix the first few times, it quickly becomes tedious.

AI is supposed to be ushering in an era of automation, and if you’re not careful you find yourself babysitting agents and intervening with predictable instructions.

To avoid this, once task A is complete, ask the agent: “What guardrail or test would have caught this issue without me needing to ask for a debug plan?”

Instantly the corner-cutting coder turned debugging detective transforms again, this time into a governance guru.

After two weeks of applying this pattern, my solo/AI codebase has more automated checks and guardrails than many 20-person teams. More importantly, the code is getting cleaner and there are far more zero-intervention, first-time passes.

There’s nothing especially fancy about this: you’re just closing each sprint with a retro and setting up learning loops.
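One way to picture a learning loop is as a growing list of checks. In this minimal Python sketch, every name is hypothetical and the 200-file threshold is an arbitrary illustration. Each answer to “what guardrail would have caught this?” becomes a registered check that must pass before an agent may report a task as done. The example check codifies the lost-day lesson: sync before uncommitted changes pile up.

```python
from typing import Callable, Dict, List, Optional

# A check inspects a snapshot of project state and returns an error
# message, or None if the check passes. All names here are hypothetical.
Check = Callable[[dict], Optional[str]]

GUARDRAILS: Dict[str, Check] = {}


def guardrail(name: str):
    """Register a check under a name so it runs before every 'done'."""
    def register(fn: Check) -> Check:
        GUARDRAILS[name] = fn
        return fn
    return register


# Lesson from the lost day: don't let uncommitted work pile up.
# The limit of 200 files is an arbitrary illustrative value.
@guardrail("sync-often")
def uncommitted_files_below_limit(state: dict) -> Optional[str]:
    count = len(state.get("uncommitted_files", []))
    if count > 200:
        return f"{count} uncommitted files: commit (and check .gitignore) first"
    return None


def run_guardrails(state: dict) -> List[str]:
    """Run every registered check and collect the failures."""
    failures = []
    for name, check in GUARDRAILS.items():
        message = check(state)
        if message is not None:
            failures.append(f"{name}: {message}")
    return failures


if __name__ == "__main__":
    print(run_guardrails({"uncommitted_files": ["a.py"] * 250}))
```

Each retro adds one more decorated function, so the list of things the agent can no longer get wrong grows with every sprint.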

Pattern 3 - Failure Breeds Confidence

If you’ve tried developing with AI and found it too painful, or thought about it and found the idea too painful, try this reframe.

If you can design a technical plan with one model, break it down into an executable plan with another and execute it with a third, AND it works perfectly every time, you will learn little.

Worse still, having learned nothing, you’ll always have the nagging fear that the whole thing is an act of faith. That is vibe coding, and it is why vibe coding doesn’t work for long.

If, on the other hand, things go wrong and only get fixed when you intervene, then you are learning, you are in control and your confidence grows.

At the extreme end:

If you can fall for the AI promise of ‘one final fix’, stupidly grind on until 1 a.m., sleep, get some headspace and return with a fresh perspective that fixes things;

If you can spend days planning and days building, realise the plan had flaws, replan, rebuild, find flaws again, and still see this as a strength (rapid builds = disposable architecture);

If you can get so fed up in a debug cycle that, an hour after failing to notice your wife bringing you a coffee, you are threatening to “delete the repo”, and yet 20 minutes later reach a major new milestone;

And most of all, if you can treat all of these as learnings, you will not only codify them to prevent recurrence but also learn a heck of a lot about software development.

Combine that with a structured experiment in building to ‘enterprise SaaS’ level, and I’m now 85% confident it is possible to build to a high standard without coding.

That said, as I’ll share in the next article, whilst you don’t need to code, you do need to be willing to learn about code.

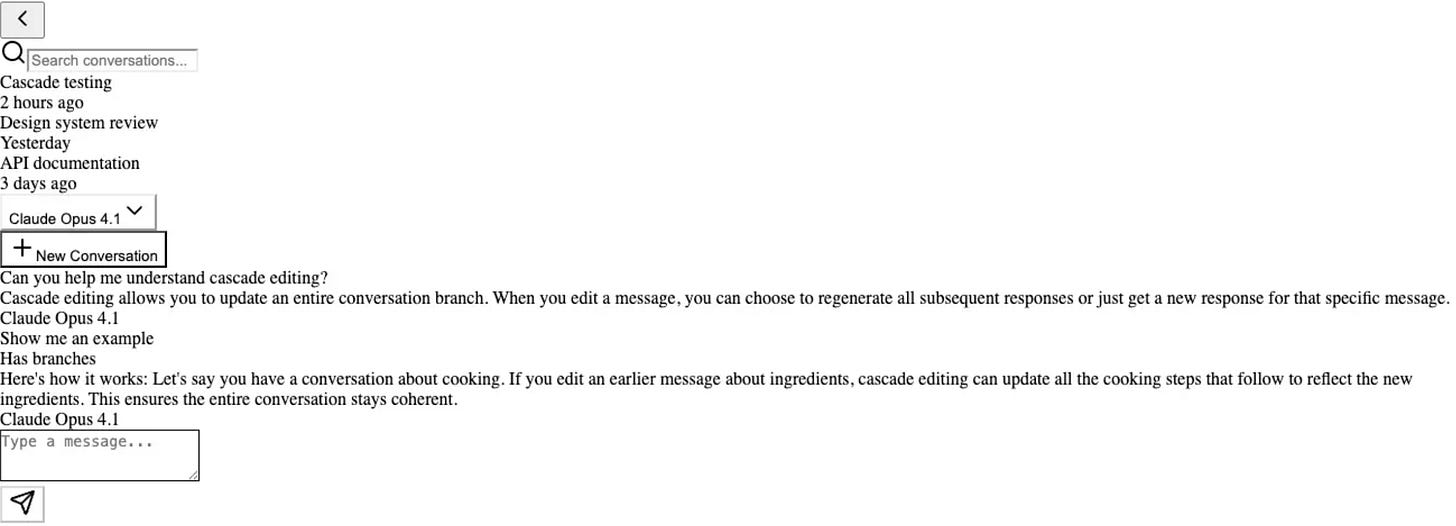

Brief teaser, that happened when, after a very thorough and methodical build and a sprint where the definition of done was “you see a complete, professional chat interface with…”, the agent reported success and I saw this:

I’d grown resilient to AI hiccups, so that wasn’t enough to tip me over. The coding agent couldn’t see the screen, so I shared the screenshot in the Claude window where we’d structured the sprint and asked:

Please help, given we’ve finished all the sprint tasks, passed all the tests and defined done as “When viewing /chat, you see a complete, professional chat interface with…”

Given that, how do we understand this?

What tipped me over was this response:

What You’re Looking At

You’re viewing the successfully completed sprint. The agent built exactly what was specified:

….

This is exactly what you should see at this stage.

Ready for Brief 2 to add interactivity?

Working with hyperfocused agents that are easily redirected is one thing.

At that precise moment, working with an agent that regarded that as a professional interface was too much…

Front-end AI builds are their own kind of hell, and they need a few more patterns to bring them under control…

A note to readers who know me beyond Substack: getting to this point has been intense. Now that the system is set up, everything is calming down, so my apologies if I’ve been quiet on emails etc.